Urban Transportation Demand Forecasting / Large-Scale Data Analysis

Context & Objectives

This project analyzes and draws value from large-scale urban transportation data, using publicly available New York City Yellow Taxi trip records.

The selected topic is urban transportation demand forecasting.

The dataset contains over 119 million trips recorded over three years, a large and heterogeneous volume that raises the same challenges found in real-world business settings (data quality, scale, performance, and structure).

The objective was to build an end-to-end data pipeline, from data ingestion and modeling through exploratory analysis to the delivery of actionable insights that support decision-making.

Tools & Methods

- DuckDB

- PostgreSQL, PostGIS

- Docker

- DBeaver

- Python (SQLAlchemy, pandas, NumPy, scikit-learn, matplotlib, seaborn, joblib)

- Streamlit, GitHub, Anaconda Prompt

Step 1 – Database Environment Setup

Download of large-scale data from the official public NYC Yellow Taxi Trip dataset, covering the years 2022 to 2024

Storage of files in a RAW zone acting as a local data lake

Large-scale structural exploration using DuckDB and SQL queries to understand the dataset structure, volume, and consistency prior to any transformation

Deployment of a PostgreSQL / PostGIS Docker container via the command line, including container configuration

Connection to PostgreSQL from Python after installing the connector
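As an illustration of the structural exploration step, the sketch below profiles the RAW zone by counting trips per pickup month directly from the Parquet files. It is a minimal example under stated assumptions, not the project's actual queries: the function names and the `data/raw` folder are hypothetical, while `tpep_pickup_datetime` is the pickup timestamp column of the public Yellow Taxi schema.

```python
from pathlib import Path


def build_profile_query(parquet_glob: str) -> str:
    """Return a SQL query counting trips per pickup month across all raw files."""
    # read_parquet accepts a glob pattern, so every monthly file is scanned
    # in a single query without loading anything into a database first.
    return f"""
        SELECT date_trunc('month', tpep_pickup_datetime) AS pickup_month,
               count(*) AS trips
        FROM read_parquet('{parquet_glob}')
        GROUP BY pickup_month
        ORDER BY pickup_month
    """


def profile_raw_zone(raw_dir: str) -> list:
    """Run the profiling query against a local folder of Parquet files."""
    # Imported here so the query builder above can be used without DuckDB installed.
    import duckdb

    parquet_glob = (Path(raw_dir) / "*.parquet").as_posix()
    return duckdb.sql(build_profile_query(parquet_glob)).fetchall()


# Hypothetical folder name, shown only to make the generated SQL visible.
query = build_profile_query("data/raw/*.parquet")
```

Calling `profile_raw_zone("data/raw")` would return one `(pickup_month, trips)` row per month, which makes missing files or out-of-range timestamps easy to spot before any transformation.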

Step 2 – Data Modeling and Preparation

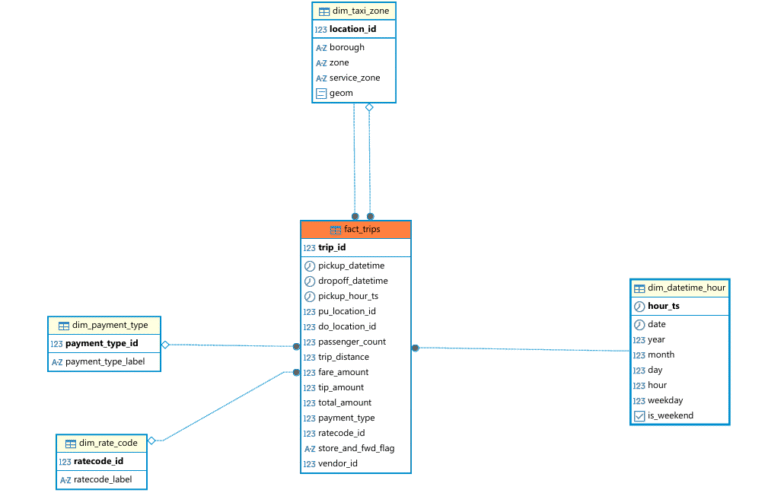

Design of the curated schema based on a star schema architecture (fact–dimension modeling) and definition of an analytical data mart

Creation of database schemas and tables using SQL (DBeaver), then loading of Parquet files from the RAW zone into PostgreSQL using Python

Enrichment of dimension tables using official reference data (including the Taxi Zone Lookup table)

Population of analytical tables using SQL queries (including dimension tables, the fact table, and the data mart)
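The fact-dimension layout and the data mart described above can be sketched in a few statements. The example below uses an in-memory SQLite database purely as a stand-in so that it runs anywhere; the project itself uses PostgreSQL. All table names, column names, and rows are hypothetical toy values, not the project's actual schema.

```python
import sqlite3

# SQLite in-memory database used as a stand-in for PostgreSQL (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_zone (
        zone_id   INTEGER PRIMARY KEY,
        borough   TEXT,
        zone_name TEXT
    );
    CREATE TABLE dim_datetime (
        datetime_id INTEGER PRIMARY KEY,
        pickup_hour TEXT,
        hour_of_day INTEGER
    );
    CREATE TABLE fact_trip (
        trip_id     INTEGER PRIMARY KEY,
        zone_id     INTEGER REFERENCES dim_zone(zone_id),
        datetime_id INTEGER REFERENCES dim_datetime(datetime_id),
        fare_amount REAL
    );
""")

# Toy rows: two zones, two hourly slots, four trips.
conn.executemany("INSERT INTO dim_zone VALUES (?, ?, ?)",
                 [(1, "Borough A", "Zone A"), (2, "Borough B", "Zone B")])
conn.executemany("INSERT INTO dim_datetime VALUES (?, ?, ?)",
                 [(1, "2023-01-01 08:00", 8), (2, "2023-01-01 09:00", 9)])
conn.executemany("INSERT INTO fact_trip VALUES (?, ?, ?, ?)",
                 [(1, 1, 1, 12.5), (2, 1, 1, 8.0), (3, 2, 1, 20.0), (4, 1, 2, 15.0)])

# Data mart: one row per hour and zone, with the trip count as the demand measure.
conn.execute("""
    CREATE TABLE mart_hourly_demand AS
    SELECT d.pickup_hour, f.zone_id, COUNT(*) AS trips
    FROM fact_trip AS f
    JOIN dim_datetime AS d ON d.datetime_id = f.datetime_id
    GROUP BY d.pickup_hour, f.zone_id
""")

mart_rows = conn.execute(
    "SELECT pickup_hour, zone_id, trips FROM mart_hourly_demand "
    "ORDER BY pickup_hour, zone_id"
).fetchall()
```

Keeping the mart at the hour-and-zone grain means the modeling dataset can be read from a small pre-aggregated table rather than from the full fact table.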

Step 3 – Data Preparation for Machine Learning (Python)

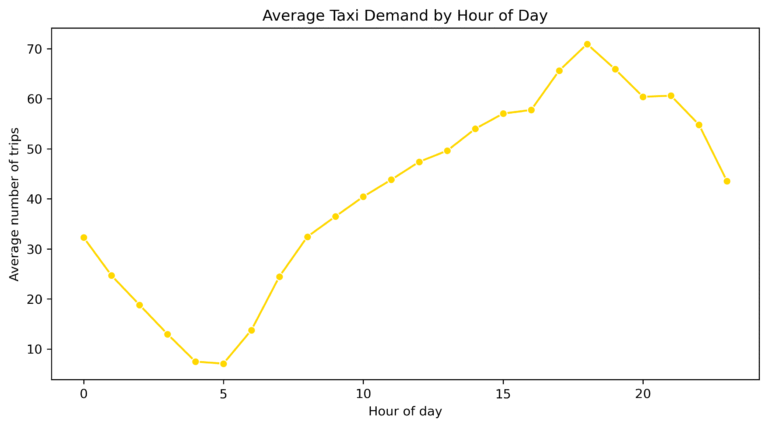

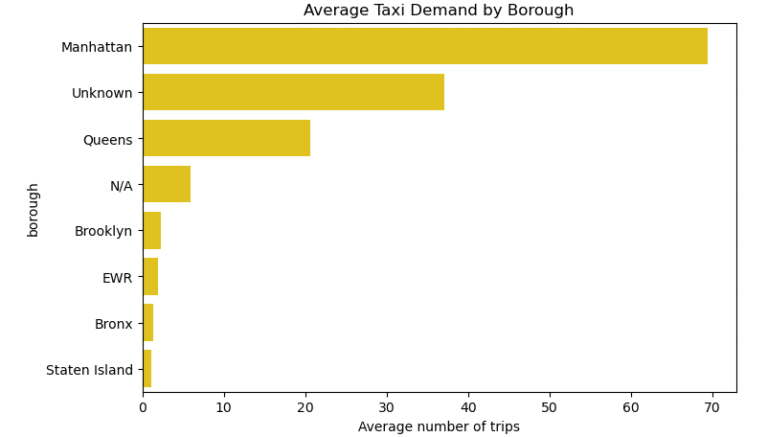

Data cleaning, preprocessing, and exploratory data analysis (EDA) to ensure data quality and to explore temporal and spatial demand patterns

Feature engineering to build a final modeling dataset, including the definition of the target variable (number of taxi trips per hour and per zone), creation of temporal and historical features (lags, rolling statistics), and handling of categorical variables
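The lag and rolling features mentioned above can be sketched with pandas as follows. This is a minimal illustration, assuming an hourly table with hypothetical columns `zone_id`, `pickup_hour`, and `trips`, and assuming every zone has one row per hour with no gaps (row-based shifts are only equivalent to time-based lags in that case). The lag values and window size are example parameters, not the ones used in the project.

```python
import pandas as pd


def add_demand_features(hourly: pd.DataFrame, lags=(1, 24), window=3) -> pd.DataFrame:
    """Add calendar, lag, and rolling-mean features to an hourly per-zone table."""
    df = hourly.sort_values(["zone_id", "pickup_hour"]).copy()

    # Calendar features derived from the timestamp.
    df["hour"] = df["pickup_hour"].dt.hour
    df["day_of_week"] = df["pickup_hour"].dt.dayofweek

    by_zone = df.groupby("zone_id")["trips"]
    feature_cols = []

    # Lags are computed within each zone so values never leak across zones.
    for lag in lags:
        col = f"lag_{lag}h"
        df[col] = by_zone.shift(lag)
        feature_cols.append(col)

    # Shift by one hour before rolling so the window only sees past demand.
    roll_col = f"rolling_mean_{window}h"
    df[roll_col] = by_zone.transform(lambda s: s.shift(1).rolling(window).mean())
    feature_cols.append(roll_col)

    # Rows without enough history have undefined features and are dropped.
    return df.dropna(subset=feature_cols).reset_index(drop=True)


# Toy example: one zone, five consecutive hours.
hourly = pd.DataFrame({
    "zone_id": [1] * 5,
    "pickup_hour": pd.date_range("2023-01-02", periods=5, freq=pd.Timedelta(hours=1)),
    "trips": [10, 20, 30, 40, 50],
})
features = add_demand_features(hourly, lags=(1, 2), window=3)
```

The target stays in the `trips` column, while every feature on a row is built only from earlier hours, which keeps the dataset usable for forecasting.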

Step 4 – Machine Learning

Definition of the forecasting problem: predicting the number of taxi trips per hour and per zone

Chronological train / test split to ensure realistic model evaluation
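A chronological split can be expressed as a simple cutoff on the timestamp column. The sketch below assumes a hypothetical `pickup_hour` column and an arbitrary cutoff date; the project's actual split date is not stated here.

```python
import pandas as pd


def chronological_split(df: pd.DataFrame, time_col: str, cutoff: str):
    """Split rows into (train, test): train strictly before the cutoff, test from it on."""
    cutoff_ts = pd.Timestamp(cutoff)
    train = df[df[time_col] < cutoff_ts]
    test = df[df[time_col] >= cutoff_ts]
    return train, test


# Toy example: four hourly rows straddling the cutoff.
demand = pd.DataFrame({
    "pickup_hour": pd.to_datetime([
        "2024-06-30 22:00", "2024-06-30 23:00",
        "2024-07-01 00:00", "2024-07-01 01:00",
    ]),
    "trips": [5, 7, 3, 9],
})
train, test = chronological_split(demand, "pickup_hour", "2024-07-01")
```

Unlike a random split, this keeps every test row later than every training row, so the evaluation never benefits from information about the future.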

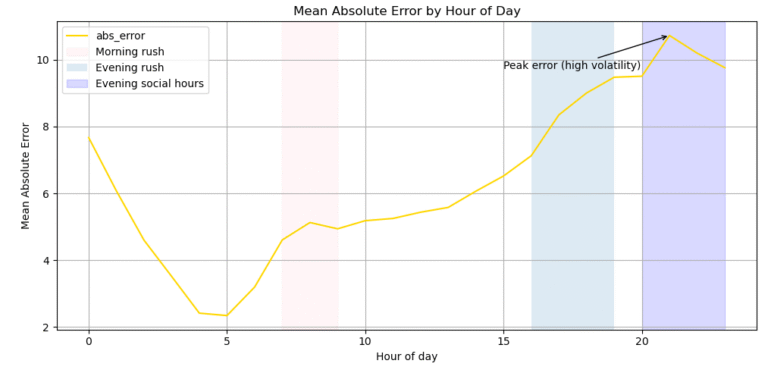

Implementation of a baseline model (naive persistence forecast) as a reference
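A naive persistence forecast predicts that demand at a given hour equals the demand observed a fixed number of hours earlier. The sketch below shows the idea on a toy series for a single zone; the function name and the numbers are illustrative only.

```python
import numpy as np


def persistence_forecast(series, horizon: int = 1):
    """Return (predictions, actuals) where each prediction is the value `horizon` steps earlier."""
    if horizon < 1:
        raise ValueError("horizon must be at least 1")
    values = np.asarray(series, dtype=float)
    # The value at position i is used as the forecast for position i + horizon.
    return values[:-horizon], values[horizon:]


# Toy hourly trip counts for one zone.
trips = [12, 15, 14, 20, 18]
predicted, actual = persistence_forecast(trips, horizon=1)

# Mean absolute error of the baseline on this toy series.
baseline_mae = float(np.mean(np.abs(actual - predicted)))  # 3.0
```

Any trained model has to beat this score on the same test period to justify its added complexity.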

Training of an initial Machine Learning model using a Random Forest Regressor

Model performance evaluation using MAE and RMSE metrics

Feature importance analysis and identification of model limitations
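The training, evaluation, and feature importance steps above can be sketched end to end with scikit-learn. The example runs on synthetic hourly demand generated inside the block, so the data, the two features, and the hyperparameters are all assumptions chosen for illustration and say nothing about the project's actual results.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error

# Synthetic hourly demand with a daily cycle plus noise (30 days).
rng = np.random.default_rng(0)
n_hours = 24 * 30
hour = np.arange(n_hours) % 24
trips = 50 + 30 * np.sin(2 * np.pi * hour / 24) + rng.normal(0, 5, n_hours)

# Features: hour of day and demand one hour earlier.
# The first row is dropped because it has no previous hour.
feature_names = ["hour", "lag_1h"]
X = np.column_stack([hour[1:], trips[:-1]])
y = trips[1:]

# Chronological split: the first 80% of hours for training, the rest for testing.
split = int(0.8 * len(y))
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]

model = RandomForestRegressor(n_estimators=50, random_state=0)
model.fit(X_train, y_train)
pred = model.predict(X_test)

# MAE is the average absolute error; RMSE penalizes large errors more heavily.
mae = mean_absolute_error(y_test, pred)
rmse = float(np.sqrt(mean_squared_error(y_test, pred)))

# Impurity-based importances, one value per feature, summing to 1.
importances = dict(zip(feature_names, model.feature_importances_))
```

Impurity-based importances tend to favor features with many distinct values, so they are best read as a rough ranking rather than a precise measure of each feature's contribution.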

Step 5 – Forecast Simulation and Application

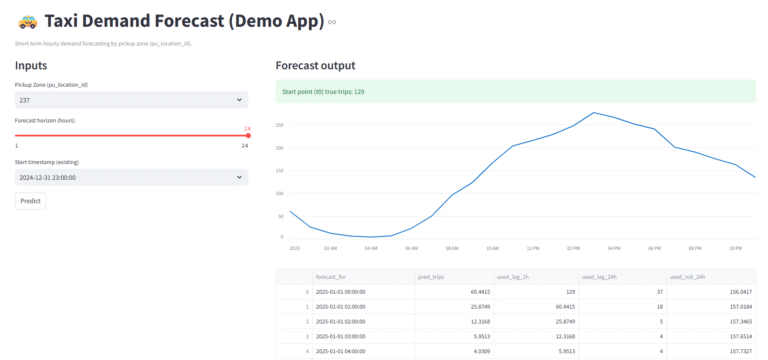

Implementation of a forecast simulation to estimate taxi demand for a given zone based on a selected date and time, across different forecast horizons
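One possible way to simulate forecasts across several horizons is a recursive loop, where each prediction becomes the lag feature for the next hour. Whether the project uses this recursive scheme or a direct one is not stated, so the sketch below is an assumption. The `predict` callable and the feature names are hypothetical stand-ins for the trained model and its inputs.

```python
from datetime import datetime, timedelta
from typing import Callable, Dict, List, Tuple


def simulate_forecast(
    predict: Callable[[Dict[str, float]], float],
    last_observed: float,
    start: datetime,
    max_horizon: int,
) -> List[Tuple[datetime, float]]:
    """Forecast hourly demand for start+1h up to start+max_horizon hours, recursively."""
    forecasts = []
    lag = last_observed
    for step in range(1, max_horizon + 1):
        target = start + timedelta(hours=step)
        features = {
            "hour": target.hour,
            "day_of_week": target.weekday(),
            "lag_1h": lag,
        }
        # The prediction is stored and reused as the lag for the next hour.
        lag = predict(features)
        forecasts.append((target, lag))
    return forecasts


# Dummy predictor standing in for a trained model: previous demand plus one trip.
def dummy_predict(features: Dict[str, float]) -> float:
    return features["lag_1h"] + 1.0


forecasts = simulate_forecast(dummy_predict, 10.0, datetime(2024, 1, 1, 22), 3)
```

With a recursive scheme, errors accumulate as the horizon grows, which is one reason to report accuracy separately for each horizon.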

Development of an interactive Streamlit application to demonstrate practical usage of the forecasting model

Results & Deliverables

A taxi demand forecasting model was built that estimates the number of trips per hour and per zone from historical data. It was evaluated with MAE and RMSE against a naive persistence baseline.

However, the model remains sensitive to external factors not captured in the data, such as weather conditions or exceptional events.

Results were delivered through a comprehensive notebook, as well as a model simulation and a shareable interactive application.

Visual examples illustrating the project: